Strategies for the Allocation of Memory

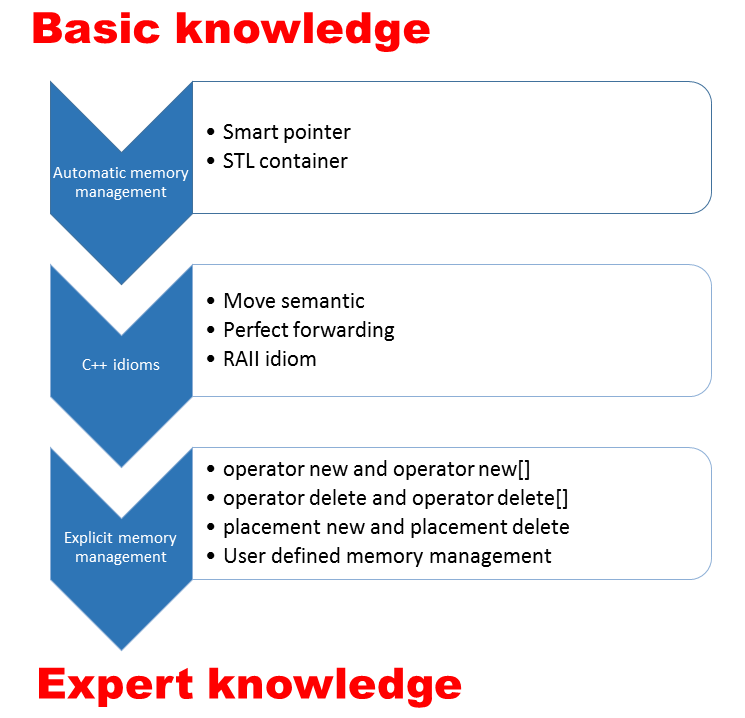

There are many different strategies for allocating memory. Programming languages like Python or Java request their memory from the heap at runtime. Of course, C and C++ also have a heap but prefer the stack. But these are by far not the only strategies for allocating memory. You can preallocate memory at the program’s start time as a fixed block or as a pool of memory blocks. This preallocated memory can then be used at runtime. But the critical question is: What are the pros and cons of the various strategies for allocating memory?

First, I want to present four typical memory allocation strategies.

Strategies for the allocation of memory

Dynamic allocation

Dynamic allocation, also called variable allocation, is familiar to every programmer. Operations like new in C++ or malloc in C request memory when it is needed. Conversely, calls like delete in C++ or free in C release the memory when it is no longer needed.

int* myHeapInt= new int(5);

delete myHeapInt;

The subtle difference in the implementation is whether the memory is released automatically. Languages like Java or Python have built-in garbage collection; C and C++ do not. (For clarification: that is not entirely true, because the Boehm–Demers–Weiser conservative garbage collector can be used as a garbage-collecting replacement for C malloc or C++ new. Here are the details: https://www.hboehm.info/gc/.)

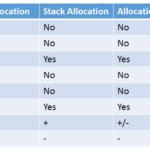

Dynamic memory management has a lot of pros. You can adjust the amount of memory to your needs at runtime. The cons are that there is always the danger of memory fragmentation. In addition, a memory allocation can fail or take too much time. Therefore, many reasons speak against dynamic memory allocation in highly safety-critical software that requires deterministic timing behavior.
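The point that an allocation can fail can be made concrete with a small sketch. It is only an illustration: the helper names tryAllocate and allocateOrReport are hypothetical and not part of the original post. A plain new reports failure by throwing std::bad_alloc, while new (std::nothrow) returns a null pointer instead.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <new>      // std::nothrow, std::bad_alloc

// Hypothetical helper: requests `count` ints without throwing.
// The returned smart pointer is empty if the allocation failed.
std::unique_ptr<int[]> tryAllocate(std::size_t count) {
    // The nothrow form of new yields nullptr on failure instead of throwing.
    int* raw = new (std::nothrow) int[count];
    return std::unique_ptr<int[]>(raw);
}

// Hypothetical helper: the same request with plain new.
// A failure arrives as a std::bad_alloc exception and is turned into `false`.
bool allocateOrReport(std::size_t count) {
    try {
        std::unique_ptr<int[]> buffer(new int[count]);
        buffer[0] = 0;   // touch the memory so the buffer is actually used
        return true;
    } catch (const std::bad_alloc&) {
        return false;
    }
}
```

In both variants, the caller has to decide what to do when the memory is unavailable. That decision is exactly what is hard to justify in software with strict timing and safety requirements.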

Smart pointers in C++ manage their dynamic memory through objects on the stack.

Stack allocation

Stack allocation is also called memory discard. The critical idea of stack allocation is that objects are created in a temporary scope and are immediately freed when they go out of scope. Therefore, the C++ runtime takes care of the lifetime of the objects. Typical scopes are functions, objects, or loops. But you can also create artificial scopes with curly braces.

{

int myStackInt(5);

}

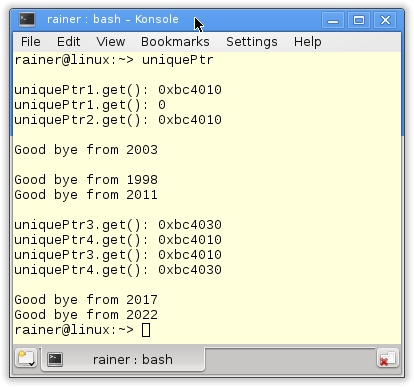

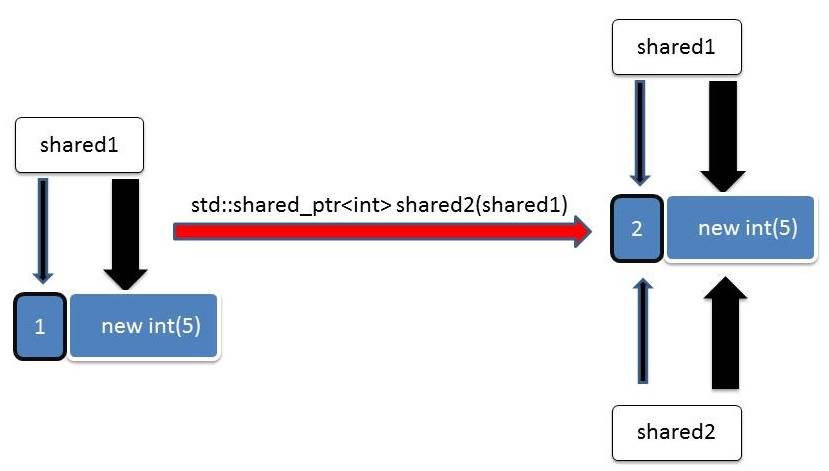
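To make the scope rule visible, here is a minimal sketch. The Tracer class and the runScopes function are hypothetical helpers introduced only for this illustration. Each Tracer records its construction and destruction in a log, so the order in which the scopes are left can be read off afterward.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical helper type: logs when it is created and when it is destroyed.
class Tracer {
public:
    Tracer(std::string name, std::vector<std::string>& log)
        : name_(std::move(name)), log_(log) {
        log_.push_back("create " + name_);
    }
    ~Tracer() { log_.push_back("destroy " + name_); }

    Tracer(const Tracer&) = delete;
    Tracer& operator=(const Tracer&) = delete;

private:
    std::string name_;
    std::vector<std::string>& log_;
};

// Runs two nested artificial scopes and returns the recorded events.
std::vector<std::string> runScopes() {
    std::vector<std::string> log;
    {
        Tracer outer("outer", log);
        {
            Tracer inner("inner", log);
        }   // inner goes out of scope and is destroyed here
        log.push_back("between");
    }       // outer goes out of scope and is destroyed here
    return log;
}
```

The log returned by runScopes contains, in this order: create outer, create inner, destroy inner, between, destroy outer. No explicit cleanup call appears anywhere in the code.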

A beautiful example of stack allocation are the smart pointers std::unique_ptr and std::shared_ptr. Both are created on the stack to take care of objects created on the heap.
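A short sketch of this idea follows. The Account struct and both function names are assumptions made for the example, and std::make_unique requires at least C++14. The smart pointers themselves live on the stack; when they leave their scope, they release the heap object they own.

```cpp
#include <cassert>
#include <memory>

// Hypothetical type used only for this illustration.
struct Account {
    double balance = 0.0;
};

// std::unique_ptr: exactly one owner on the stack.
double depositWithUniquePtr(double amount) {
    std::unique_ptr<Account> owner = std::make_unique<Account>();
    owner->balance += amount;
    return owner->balance;
}   // owner leaves scope; the heap Account is deleted automatically

// std::shared_ptr: the heap object lives until the last owner is gone.
bool sharedAccountFreedAfterScope() {
    std::weak_ptr<Account> observer;   // observes without owning
    {
        std::shared_ptr<Account> first = std::make_shared<Account>();
        std::shared_ptr<Account> second = first;   // two owners now
        observer = first;
        assert(first.use_count() == 2);
    }   // both owners leave scope; the Account is deleted
    return observer.expired();
}
```

The std::weak_ptr is only there to check, from the outside, that the Account is gone once both owners have left their scope.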

But what are the benefits of stack allocation? First, memory management is easy because the C++ runtime handles it automatically. In addition, the timing behavior of memory allocation on the stack is deterministic. But there are big disadvantages. On the one hand, the stack is smaller than the heap; on the other hand, objects often need to outlive their scope, and C++ supports no dynamic memory allocation on the stack.

Static allocation

Static allocation is often called fixed allocation. The key idea is that the memory required at runtime is requested at the start time and released at the shutdown time of the program. The general assumption is that you can precalculate the runtime memory requirements up front.

char* memory= new char[sizeof(Account)];

Account* a= new(memory) Account;

char* memory2= new char[5*sizeof(Account)];

Account* b= new(memory2) Account[5];

Objects a and b in this example are constructed in the preallocated memory of memory and memory2.
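A variation of this technique, sketched under a few assumptions, reserves the buffer as a static array instead of requesting it with new at start time. The Account definition, the bound maxAccounts, and the helpers constructAt and destroyAccount are hypothetical. The sketch also shows two details that are easy to miss with placement new: the buffer must be suitably aligned, and the destructor has to be called explicitly.

```cpp
#include <cassert>
#include <cstddef>
#include <new>       // placement new

// Hypothetical type used only for this illustration.
struct Account {
    double balance;
    explicit Account(double b = 0.0) : balance(b) {}
};

// Assumed upper bound, fixed before the program runs.
constexpr std::size_t maxAccounts = 5;

// Storage that exists for the whole program run, aligned for Account.
alignas(Account) static unsigned char storage[maxAccounts * sizeof(Account)];

// Constructs an Account in slot `index` of the fixed buffer.
Account* constructAt(std::size_t index, double balance) {
    assert(index < maxAccounts);
    void* slot = storage + index * sizeof(Account);
    return new (slot) Account(balance);   // no allocation, only construction
}

// Placement new has no matching delete: the destructor is called explicitly.
void destroyAccount(Account* account) {
    account->~Account();
}
```

No memory is requested or released at runtime here; constructAt and destroyAccount only start and end the lifetime of objects inside storage that already exists.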

What are the pros and cons of static memory allocation? In hard real-time applications, in which the timing behavior of dynamic memory allocation is not acceptable, static allocation is often used. In addition, the fragmentation of memory is minimal. But of course, there are also cons. Often it is not possible to precalculate the application’s memory requirements upfront. Sometimes your application requires an extraordinary amount of memory at runtime. That may be an argument against static memory allocation. In addition, the start time of your program is longer.


Memory pool

Memory pool, also called pooled allocation, combines the predictability of static memory allocation with the flexibility of dynamic memory allocation. Similar to static allocation, you request the memory at the start time of your program. In contrast to static allocation, you request a pool of objects. At runtime, the application takes objects from this pool and returns them to the pool. If you have more than one typical object size, it makes sense to create more than one memory pool.
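The following is a minimal sketch of such a pool, not a production implementation. The class name FixedPool and its member functions acquire, release, and available are hypothetical. All storage is reserved when the pool is created; at runtime, acquire and release only move slot indices on and off a small stack, so both run in constant time. The sketch is not thread-safe.

```cpp
#include <cassert>
#include <cstddef>
#include <new>
#include <utility>

// Hypothetical fixed-size pool for up to N objects of type T.
template <typename T, std::size_t N>
class FixedPool {
public:
    FixedPool() {
        // Initially, every slot is free.
        for (std::size_t i = 0; i < N; ++i) freeIndices_[i] = i;
    }
    FixedPool(const FixedPool&) = delete;
    FixedPool& operator=(const FixedPool&) = delete;

    // Takes a free slot and constructs a T in it.
    // Returns nullptr if the pool is exhausted.
    template <typename... Args>
    T* acquire(Args&&... args) {
        if (freeCount_ == 0) return nullptr;
        const std::size_t index = freeIndices_[--freeCount_];
        void* slot = storage_ + index * sizeof(T);
        return new (slot) T(std::forward<Args>(args)...);
    }

    // Destroys the object and returns its slot to the pool.
    // The pointer must come from acquire() of this pool.
    void release(T* object) {
        object->~T();
        const auto offset = reinterpret_cast<unsigned char*>(object) - storage_;
        freeIndices_[freeCount_++] = static_cast<std::size_t>(offset) / sizeof(T);
    }

    // Number of slots that are currently free.
    std::size_t available() const { return freeCount_; }

private:
    alignas(T) unsigned char storage_[N * sizeof(T)];  // reserved once
    std::size_t freeIndices_[N];                       // stack of free slots
    std::size_t freeCount_ = N;
};
```

A pool such as FixedPool<Account, 100> would serve one object size. For a second typical size, you would create a second pool with its own element type and capacity.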

What are the pros and cons? The pros are quite similar to the pros of static memory allocation. On the one hand, there is no memory fragmentation; on the other hand, you have predictable timing behavior for memory allocation and deallocation. But memory pool allocation has one significant advantage over static allocation: you have more flexibility. This is for two reasons. The pools can have different sizes, and you can return memory to the pool. The con of memory pools is that this technique is quite challenging to implement.

What’s next?

Sometimes, I envy Python or Java. They use dynamic memory allocation combined with garbage collection, and all is fine. All? All four techniques presented here are used in C or C++, and each has a lot to offer. In the next post, I will closely examine the differences between the four techniques. I’m particularly interested in predictability, scalability, internal and external fragmentation, and memory exhaustion.


Rainer Grimm

Yalovastraße 20

72108 Rottenburg

Mail: schulung@ModernesCpp.de

Mentoring: www.ModernesCpp.org
