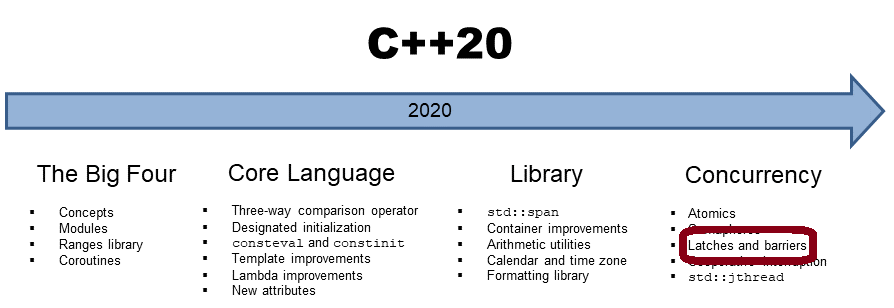

Latches And Barriers

Latches and barriers are simple thread synchronization mechanisms, enabling some threads to wait until a counter becomes zero. We will, presumably in C++20, get latches and barriers in three variations: std::latch, std::barrier, and std::flex_barrier.

First, there are two questions:

- What are the differences between these three mechanisms to synchronize threads? You can use a std::latch only once, but you can use a std::barrier and a std::flex_barrier more than once. Additionally, a std::flex_barrier enables you to execute a function when the counter becomes zero.

- What use cases do latches and barriers support that cannot be handled in C++11 and C++14 with futures, threads, or condition variables in combination with locks? Latches and barriers provide no new use cases, but they are much easier to use. They are also more performant because they often use a lock-free mechanism internally.
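To make the second answer concrete, here is a minimal sketch of a latch built only from a mutex and a condition variable, which is all C++11 offers. The class SimpleLatch and the function run_workers are hypothetical names invented for this illustration; they are not part of any proposal or of the standard library.

```cpp
#include <atomic>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical hand-rolled latch that uses C++11 primitives only.
class SimpleLatch {
public:
    explicit SimpleLatch(std::ptrdiff_t count) : count_(count) {}

    // Decrement the counter and wake all waiters once it reaches zero.
    void count_down() {
        std::lock_guard<std::mutex> lock(mutex_);
        if (count_ > 0 && --count_ == 0) {
            cond_.notify_all();
        }
    }

    // Block until the counter is zero.
    void wait() {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return count_ == 0; });
    }

private:
    std::mutex mutex_;
    std::condition_variable cond_;
    std::ptrdiff_t count_;
};

// Starts n_tasks workers and returns how many of them had finished
// at the moment wait() returned.
int run_workers(int n_tasks) {
    SimpleLatch done(n_tasks);
    std::atomic<int> finished{0};
    std::vector<std::thread> workers;
    for (int i = 0; i < n_tasks; ++i) {
        workers.emplace_back([&] {
            ++finished;         // stands in for the real work
            done.count_down();  // signal that this task is complete
        });
    }
    done.wait();                // blocks until every task has counted down
    const int seen = finished.load();
    for (auto& w : workers) w.join();
    return seen;
}
```

A call such as run_workers(4) should return 4, because every worker increments finished before it counts down. The sketch works, but you have to write and get right the mutex, the predicate, and the notification yourself, which is precisely the boilerplate a latch removes.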

Now, I will have a closer look at the three coordination mechanisms.

std::latch

std::latch is a counter that counts down. Its value is set in the constructor. A thread can decrement the counter and wait until it becomes zero by calling the method latch.count_down_and_wait. In addition, the method latch.count_down only decrements the counter by one without waiting. Furthermore, std::latch has the method latch.is_ready to test if the counter is zero and the method latch.wait to wait until the counter becomes zero. You cannot increment or reset the counter of a std::latch; hence, you cannot reuse it.

For further details on std::latch, read the documentation on cppreference.com.

Here is a short code snippet from proposal n4204.

```cpp
void DoWork(threadpool* pool) {
  latch completion_latch(NTASKS);
  for (int i = 0; i < NTASKS; ++i) {
    pool->add_task([&] {
      // perform work
      // ...
      completion_latch.count_down();
    });
  }
  // Block until work is done
  completion_latch.wait();
}
```

I set the std::latch completion_latch in its constructor to NTASKS (line 2). The thread pool executes NTASKS tasks (lines 4 to 8). The counter is decremented at the end of each task (line 7). Line 11 is the barrier for the thread running the function DoWork and, hence, for the small workflow. This thread has to wait until all tasks are done.

The proposal uses a vector<thread*> and pushes dynamically allocated threads onto the vector: workers.push_back(new thread([&] {. That is a memory leak. Instead, you should put the threads into a std::unique_ptr or create them directly in the vector: workers.emplace_back([&] {. This observation also holds for the examples of std::barrier and std::flex_barrier.
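A minimal sketch of the leak-free variant: the threads live directly in a std::vector<std::thread> and are joined before the vector goes out of scope. The function name run_and_join and the counter are hypothetical and only serve the illustration.

```cpp
#include <atomic>
#include <cstddef>
#include <thread>
#include <vector>

// Creates n_threads threads in place, joins them, and returns how many ran.
int run_and_join(int n_threads) {
    std::atomic<int> done{0};
    std::vector<std::thread> workers;
    workers.reserve(static_cast<std::size_t>(n_threads));
    for (int i = 0; i < n_threads; ++i) {
        // emplace_back constructs the std::thread inside the vector; no new, no leak.
        workers.emplace_back([&done] { ++done; });
    }
    // A std::thread must be joined (or detached) before it is destroyed.
    for (auto& w : workers) w.join();
    return done.load();
}
```

run_and_join(3) should return 3. With this layout, the vector owns the thread objects, so nothing has to be deleted by hand.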

std::barrier

A std::barrier is quite similar to a std::latch. The subtle difference is that you can use a std::barrier more than once because the counter is reset to its previous value. Immediately after the counter becomes zero, the so-called completion phase starts. In the case of a std::barrier, this completion phase is empty. That changes with a std::flex_barrier. std::barrier has two interesting methods: arrive_and_wait and arrive_and_drop. While arrive_and_wait waits at the synchronization point, arrive_and_drop removes the calling thread from the synchronization mechanism.

Before I look at the std::flex_barrier and the completion phase, I will give a short example of the std::barrier.

```cpp
void DoWork() {
  Tasks& tasks;
  int n_threads;
  vector<thread*> workers;

  barrier task_barrier(n_threads);

  for (int i = 0; i < n_threads; ++i) {
    workers.push_back(new thread([&] {
      bool active = true;
      while(active) {
        Task task = tasks.get();
        // perform task
        // ...
        task_barrier.arrive_and_wait();
      }
    }));
  }
  // Read each stage of the task until all stages are complete.
  while (!finished()) {
    GetNextStage(tasks);
  }
}
```

The std::barrier task_barrier in line 6 coordinates several threads that perform their tasks several times. The number of threads is n_threads (line 3). Each thread takes its task via tasks.get() (line 12), performs it, and then waits in line 15 until all threads have finished their tasks. After that, it takes a new task in line 12, as long as active is still true in line 11.

std::flex_barrier

From my perspective, the names in the example of the std::flex_barrier are slightly confusing. For example, the std::flex_barrier is called notifying_barrier. Therefore I used the name std::flex_barrier.

In contrast to the std::barrier, the std::flex_barrier has an additional constructor. This constructor can be parametrized by a callable unit that is invoked in the completion phase. The callable unit has to return a number. This number sets the value of the counter in the completion phase. A value of -1 means that the counter stays the same in the next iteration. Numbers smaller than -1 are not allowed.

What is happening in the completion phase?

- All threads are blocked.

- A thread is unblocked and executes the callable unit.

- When the completion phase is done, all threads are unblocked.

The code snippet shows the usage of a std::flex_barrier.

```cpp
void DoWork() {
  Tasks& tasks;
  int initial_threads;
  atomic<int> current_threads(initial_threads);
  vector<thread*> workers;

  // Create a flex_barrier, and set a lambda that will be
  // invoked every time the barrier counts down. If one or more
  // active threads have completed, reduce the number of threads.
  std::function<int()> rf = [&] { return current_threads.load(); };
  flex_barrier task_barrier(initial_threads, rf);

  for (int i = 0; i < initial_threads; ++i) {
    workers.push_back(new thread([&] {
      bool active = true;
      while(active) {
        Task task = tasks.get();
        // perform task
        // ...
        if (finished(task)) {
          current_threads--;
          active = false;
        }
        task_barrier.arrive_and_wait();
      }
    }));
  }

  // Read each stage of the task until all stages are complete.
  while (!finished()) {
    GetNextStage(tasks);
  }
}
```

The example follows a strategy similar to the std::barrier example. The difference is that this time the counter of the std::flex_barrier is adjusted at run time; therefore, the std::flex_barrier task_barrier in line 11 gets a lambda function. This lambda function captures the variable current_threads by reference. When a thread has finished its task, it decrements the variable in line 21 and sets active to false; therefore, the counter is decreased in the completion phase.

A std::flex_barrier has one specialty in contrast to a std::barrier and a std::latch: it is the only one of the three whose counter you can increase.

Read the details on std::latch, std::barrier, and std::flex_barrier at cppreference.com.

What’s next?

Coroutines are generalized functions that can be suspended and resumed while keeping their state. They are often used to implement cooperative tasks in operating systems, event loops in event systems, infinite lists, or pipelines. You can read the details about coroutines in the next post.

Rainer Grimm
