C++ Core Guidelines: More Traps in the Concurrency

Concurrency provides many ways to shoot yourself in the foot. Today's rules help you to recognize these dangers and to overcome them.

First, here are three rules for this post.

- CP.31: Pass small amounts of data between threads by value, rather than by reference or pointer

- CP.32: To share ownership between unrelated threads use shared_ptr

- CP.41: Minimize thread creation and destruction

There are more rules, which I ignore because they have no content.

CP.31: Pass small amounts of data between threads by value, rather than by reference or pointer

This rule is quite apparent; therefore, I can make it short. Passing data to a thread by value immediately gives you two benefits:

- There is no sharing and therefore, no data race is possible. The requirements for a data race are mutable, shared state. Read the details here: C++ Core Guidelines: Rules for Concurrency and Parallelism.

- You do not have to care about the lifetime of the data. The data stays alive for the lifetime of the created thread. This is, in particular, important when you detach a thread: C++ Core Guidelines: Taking Care of your Child.
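Both points can be sketched in a few lines. The type Point and the function names below are hypothetical and only serve as an illustration. By default, std::thread copies its arguments, so you have to ask for sharing explicitly with std::ref:

```cpp
#include <functional>
#include <thread>

// Hypothetical small type: two ints are cheap to copy.
struct Point {
    int x{0};
    int y{0};
};

// The thread works on its own copy; the caller's object is never touched.
void moveByValue(Point p) {
    p.x += 10;
    p.y += 10;
}

// The thread works on the caller's object; the caller must keep it alive
// and must not access it concurrently.
void moveByReference(Point& p) {
    p.x += 10;
    p.y += 10;
}

// Returns the caller's Point after a thread received a copy of it.
Point runWithCopy() {
    Point origin;
    std::thread t(moveByValue, origin);                // std::thread copies its arguments
    t.join();
    return origin;                                     // unchanged: {0, 0}
}

// Returns the caller's Point after a thread received a reference to it.
Point runWithReference() {
    Point origin;
    std::thread t(moveByReference, std::ref(origin));  // sharing is requested explicitly
    t.join();                                          // join before origin goes out of scope
    return origin;                                     // modified: {10, 10}
}
```

In runWithCopy, the thread could even be detached without any lifetime problem, because it owns its copy. In runWithReference, the join is mandatory: a detached thread could outlive origin.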

Of course, the crucial question is: What does a small amount of data mean? The C++ core guidelines are not clear on this point. In rule F.16, For “in” parameters, pass cheaply-copied types by value and others by reference to const, the C++ core guidelines state that 4 * sizeof(int) is a rule of thumb for functions. This means data smaller than 4 * sizeof(int) should be passed by value; data bigger than 4 * sizeof(int) by reference or pointer.

In the end, you have to measure the performance if necessary.
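If you want to make the rule of thumb visible in your code, you can check it at compile time. This is only a sketch: the variable template fitsRuleOfThumb and the type Point3D are my own hypothetical names, and I treat exactly 4 * sizeof(int) as still small.

```cpp
#include <array>

// Hypothetical helper, not part of the guidelines: true if T is at most
// as large as four ints (the rule of thumb quoted above).
template <typename T>
constexpr bool fitsRuleOfThumb = sizeof(T) <= 4 * sizeof(int);

struct Point3D { int x, y, z; };   // three ints

static_assert(fitsRuleOfThumb<int>, "an int is small");
static_assert(fitsRuleOfThumb<Point3D>, "three ints are small");
static_assert(!fitsRuleOfThumb<std::array<int, 64>>, "64 ints are not small");
```

Be aware that sizeof only measures the object itself. A std::vector is small according to this check, but copying it also copies its elements on the heap. The check is, therefore, a first hint and no replacement for a measurement.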

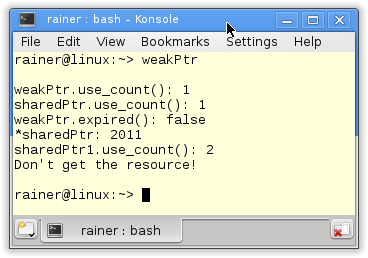

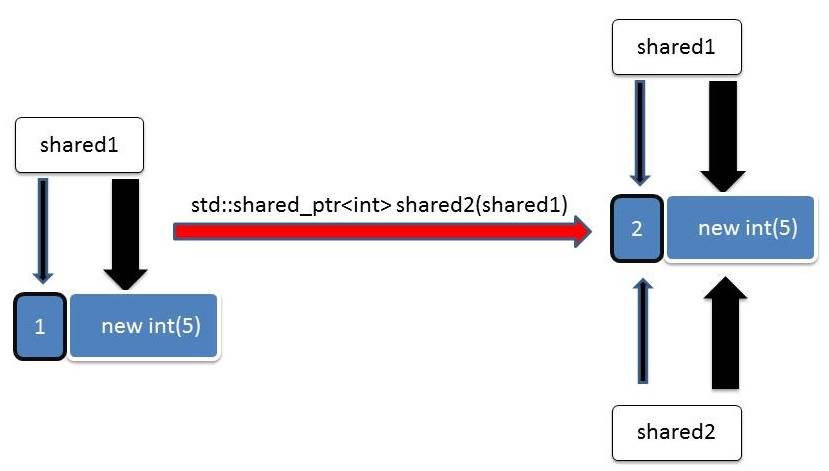

CP.32: To share ownership between unrelated threads use shared_ptr

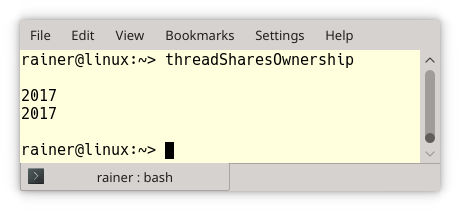

Imagine you have an object which you want to share between unrelated threads. The critical question is, who is the object’s owner and, therefore, responsible for releasing the memory? Now you can choose between a memory leak if you don’t deallocate the memory or undefined behavior because you invoked delete more than once. Most of the time, the undefined behavior ends in a runtime crash.

```cpp
// threadSharesOwnership.cpp

#include <chrono>
#include <iostream>
#include <thread>

using namespace std::literals::chrono_literals;

struct MyInt{
    int val{2017};
    ~MyInt(){                            // (4)
        std::cout << "Good Bye" << std::endl;
    }
};

void showNumber(MyInt* myInt){
    std::cout << myInt->val << std::endl;
}

void threadCreator(){
    MyInt* tmpInt= new MyInt;            // (1)

    std::thread t1(showNumber, tmpInt);  // (2)
    std::thread t2(showNumber, tmpInt);  // (3)

    t1.detach();
    t2.detach();
}

int main(){

    std::cout << std::endl;

    threadCreator();
    std::this_thread::sleep_for(1s);

    std::cout << std::endl;

}
```

Bear with me. The example is intentionally simple. I let the main thread sleep for one second to be sure that it outlives the child threads t1 and t2. This is, of course, no appropriate synchronization, but it helps me to make my point. The vital issue of the program is: Who is responsible for the deletion of tmpInt (1)? Thread t1 (2), thread t2 (3), or the function (main thread) itself? Because I cannot predict how long each thread runs, I decided to go with a memory leak. Consequently, the destructor of MyInt (4) is never called:

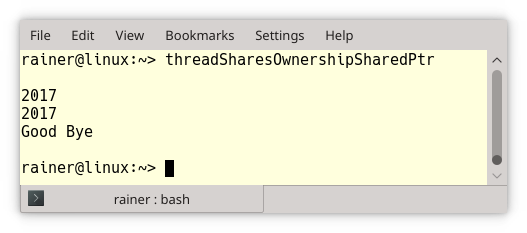

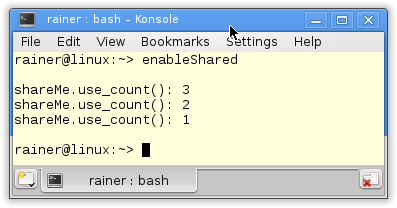

The lifetime issues are quite easy to handle if I use a std::shared_ptr.

```cpp
// threadSharesOwnershipSharedPtr.cpp

#include <chrono>
#include <iostream>
#include <memory>
#include <thread>

using namespace std::literals::chrono_literals;

struct MyInt{
    int val{2017};
    ~MyInt(){
        std::cout << "Good Bye" << std::endl;
    }
};

void showNumber(std::shared_ptr<MyInt> myInt){        // (2)
    std::cout << myInt->val << std::endl;
}

void threadCreator(){
    auto sharedPtr = std::make_shared<MyInt>();       // (1)

    std::thread t1(showNumber, sharedPtr);
    std::thread t2(showNumber, sharedPtr);

    t1.detach();
    t2.detach();
}

int main(){

    std::cout << std::endl;

    threadCreator();
    std::this_thread::sleep_for(1s);

    std::cout << std::endl;

}
```

Two small changes to the source code were necessary. First, the pointer in (1) became a std::shared_ptr; second, the function showNumber takes a smart pointer instead of a plain pointer.
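To observe that the object is released exactly once, here is a variation of the program. It is a sketch under my own assumptions: I join the threads instead of detaching them, I count the destructor calls in a hypothetical global counter destroyed instead of printing, and the function names are made up.

```cpp
#include <atomic>
#include <functional>
#include <memory>
#include <thread>

// Counts destructor calls so the effect is observable without console output.
std::atomic<int> destroyed{0};

struct MyInt {
    int val{2017};
    ~MyInt() { ++destroyed; }
};

// Each thread owns a share of the object through its by-value parameter.
void readNumber(std::shared_ptr<MyInt> myInt, int& out) {
    out = myInt->val;
}

// Returns the sum of the values both threads read.
int shareBetweenThreads() {
    int r1{0};
    int r2{0};
    {
        auto sharedPtr = std::make_shared<MyInt>();
        std::thread t1(readNumber, sharedPtr, std::ref(r1));  // each thread writes its own int
        std::thread t2(readNumber, sharedPtr, std::ref(r2));
        t1.join();
        t2.join();
    }   // the creator gives up its share here; no owner is left
    return r1 + r2;
}
```

Whoever gives up the last share deletes the MyInt. Neither the threads nor the creator has to know who that is.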

CP.41: Minimize thread creation and destruction

How expensive is a thread? Quite expensive! This is the issue behind this rule. Let me first talk about the usual size of a thread and then the costs of its creation.

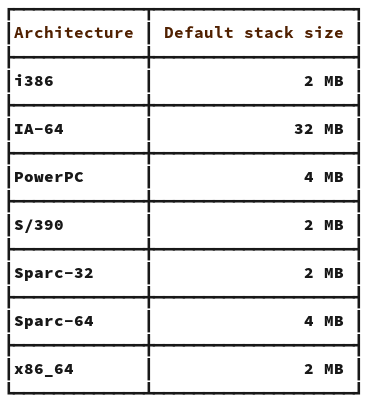

Size

A std::thread is a thin wrapper around the native thread. This means I’m interested in the size of Windows and POSIX threads.

- Windows systems: the post Thread Stack Size gave me the answer: 1 MB.

- POSIX systems: the pthread_create man-page provides me with the answer: 2 MB. These are the sizes for the i386 and x86_64 architectures. The man-page also lists the sizes for further architectures that support POSIX.
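On a POSIX system, you can also ask for the default stack size at run time. The following is a minimal sketch that assumes pthread.h is available; the function name defaultThreadStackSize is hypothetical. The result may differ from the 2 MB above because, according to the man-page, on Linux the stack resource limit (ulimit -s) determines the default unless it is unlimited.

```cpp
#include <cstddef>
#include <pthread.h>

// Returns the default stack size in bytes that a new POSIX thread would get,
// or 0 if the query fails.
std::size_t defaultThreadStackSize() {
    pthread_attr_t attr;
    if (pthread_attr_init(&attr) != 0) return 0;   // default thread attributes

    std::size_t size{0};
    const int rc = pthread_attr_getstacksize(&attr, &size);
    pthread_attr_destroy(&attr);
    return rc == 0 ? size : 0;
}
```

Dividing the result by 1024 * 1024 gives the size in MB.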

Creation

I didn’t find numbers on how long it takes to create a thread. I made a simple performance test on Linux and Windows to get a gut feeling.

I used GCC 6.2.1 on a desktop and cl.exe on a laptop for my performance tests. The cl.exe is part of Microsoft Visual Studio 2017. I compiled the programs with maximum optimization. This means the flag -O3 on Linux and /Ox on Windows.

Here is my small test program.

```cpp
// threadCreationPerformance.cpp

#include <chrono>
#include <iostream>
#include <thread>

static const long long numThreads= 1000000;

int main(){

    auto start = std::chrono::system_clock::now();

    for (volatile int i = 0; i < numThreads; ++i) std::thread([]{}).detach();  // (1)

    std::chrono::duration<double> dur= std::chrono::system_clock::now() - start;
    std::cout << "time: " << dur.count() << " seconds" << std::endl;

}
```

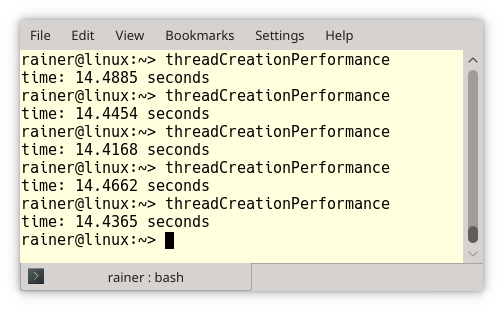

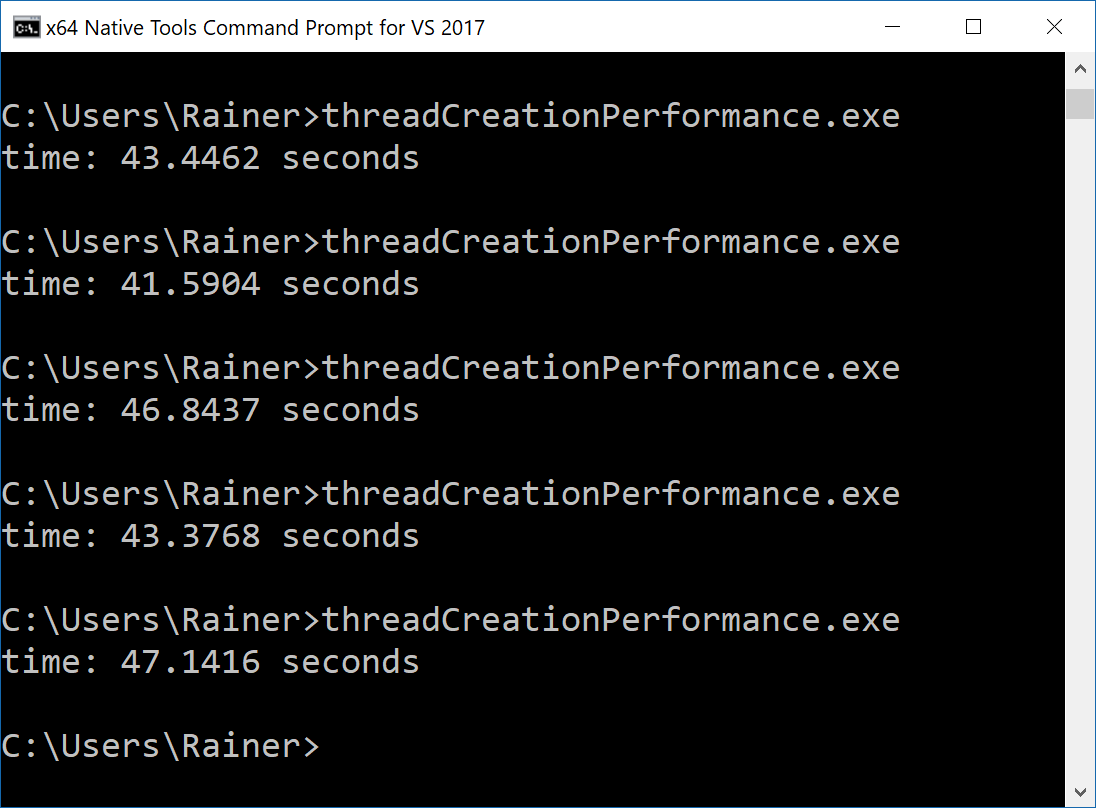

The program creates 1 million threads that execute an empty lambda function (1). These are the numbers for Linux and Windows:

Linux:

This means that creating a thread took about 14.5 sec / 1000000 = 14.5 microseconds on Linux.

Windows:

It took about 44 sec / 1000000 = 44 microseconds on Windows.

To put it the other way around: in one second, you can create about 69 thousand threads on Linux and 23 thousand on Windows.
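The consequence of these numbers is to create a thread once and reuse it for many tasks. Here is a minimal sketch of this idea; the class Worker and the function runOnOneThread are hypothetical names of mine, and the sketch is no full-fledged thread pool.

```cpp
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical minimal worker: one thread is created once and then reused
// for every task, instead of paying the creation cost per task.
class Worker {
public:
    Worker() : thread_([this] { run(); }) {}

    // Lets the thread finish the remaining tasks, then joins it.
    ~Worker() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            done_ = true;
        }
        ready_.notify_one();
        thread_.join();
    }

    Worker(const Worker&) = delete;
    Worker& operator=(const Worker&) = delete;

    // Hands a task to the already running thread.
    void submit(std::function<void()> task) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            tasks_.push(std::move(task));
        }
        ready_.notify_one();
    }

private:
    void run() {
        for (;;) {
            std::function<void()> task;
            {
                std::unique_lock<std::mutex> lock(mutex_);
                ready_.wait(lock, [this] { return done_ || !tasks_.empty(); });
                if (tasks_.empty()) return;   // done_ is set and nothing is left
                task = std::move(tasks_.front());
                tasks_.pop();
            }
            task();                           // run the task outside the lock
        }
    }

    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<std::function<void()>> tasks_;
    bool done_{false};
    std::thread thread_;   // declared last: it starts after the other members exist
};

// Runs numTasks tiny tasks on a single reused thread and returns how many ran.
int runOnOneThread(int numTasks) {
    int counter{0};        // only touched by the worker thread until the join
    {
        Worker worker;
        for (int i = 0; i < numTasks; ++i) worker.submit([&counter] { ++counter; });
    }                      // the destructor waits for all submitted tasks
    return counter;
}
```

A call such as runOnOneThread(1000000) executes one million tasks but creates only one thread. Whether this is faster than the test program above depends on the cost of the queue and the synchronization, so you have to measure.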

What’s next?

What is the easiest way to shoot yourself in the foot? Use a condition variable! Don’t you believe it? Wait for the next post!
